A Beginner's Guide to Regression in Machine Learning

Regression : —

Regression falls under the supervised learning category of machine learning. It refers to predicting continuous outcomes and is also known as Regression Analysis.

In supervised learning, the dataset already gives us the outputs (labels) that correspond to each instance's features.

Linear Regression : —

When we predict a single continuous target as a linear function of the input features, the model is known as a Linear Regression model.

A linear model makes a prediction by simply computing a weighted sum of the input features, plus a constant called the bias term (also called the intercept term).

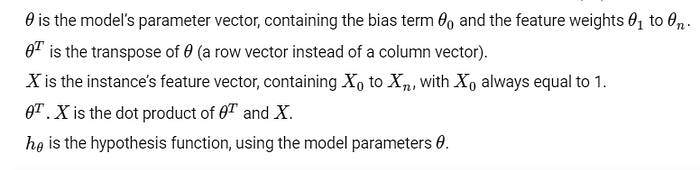

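In vectorized form:

$$\hat{y} = h_{\boldsymbol{\theta}}(\mathbf{x}) = \boldsymbol{\theta}^\top \mathbf{x}$$

Where:

$\boldsymbol{\theta}$ is the model's parameter vector, containing the bias term $\theta_0$ and the feature weights $\theta_1$ to $\theta_n$; $\mathbf{x}$ is the instance's feature vector, containing $x_0$ to $x_n$, with $x_0$ always equal to 1; $\boldsymbol{\theta}^\top \mathbf{x}$ is the dot product of the two vectors; and $h_{\boldsymbol{\theta}}$ is the hypothesis function, using the model parameters $\boldsymbol{\theta}$.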
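To make the link between the weighted sum and the vectorized form concrete, here is a minimal NumPy sketch. The parameter and feature values are made up for illustration; the point is only that adding the bias to the weighted sum gives the same number as one dot product once a constant $x_0 = 1$ is prepended to the features.

```python
import numpy as np

# Illustrative (made-up) parameters: bias theta_0 followed by weights theta_1..theta_3
theta = np.array([2.0, 0.5, -1.0, 3.0])
# One instance with three features x_1..x_3
x = np.array([4.0, 1.0, 2.0])

# Scalar form: bias term plus the weighted sum of the features
y_hat_sum = theta[0] + sum(theta[i + 1] * x[i] for i in range(len(x)))

# Vectorized form: prepend x_0 = 1, then take a single dot product
x_with_bias = np.r_[1.0, x]
y_hat_dot = theta.dot(x_with_bias)

print(y_hat_sum, y_hat_dot)  # both are 9.0
```

Prepending the constant 1 is the same trick the code later in this article uses with `np.c_[np.ones(...), X]` to fold the bias term into the matrix product.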

So before we start training our model, we should be clear about what “training a model” actually means.

In simple language : —

Training a model means setting the model’s parameter values as close as possible to the parameter values of the original (true) model that generated the data.

In machine learning : —

“Training a model means setting its parameters so that the model best fits the training set.”

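Therefore, to train a Linear Regression model, we need to find the value of θ that minimizes the cost function, here the MSE (mean squared error). We minimize the MSE rather than the RMSE (root mean squared error) because it is simpler, and since the square root is an increasing function, the θ that minimizes one also minimizes the other.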
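For a training set of $m$ instances, the MSE of a linear model is the average squared prediction error:

$$\text{MSE}(\boldsymbol{\theta}) = \frac{1}{m}\sum_{i=1}^{m}\left(\boldsymbol{\theta}^\top \mathbf{x}^{(i)} - y^{(i)}\right)^2$$

A minimal sketch of this cost in NumPy follows. The helper name `mse` and the three-point dataset are hypothetical, chosen only so the results are easy to check by hand; the feature matrix is assumed to already contain the $x_0 = 1$ column.

```python
import numpy as np

def mse(theta, X_b, y):
    """Hypothetical helper: mean squared error of a linear model.

    X_b is the (m, n+1) feature matrix whose first column is all ones (x_0 = 1),
    y is the (m, 1) vector of targets, theta is the (n+1, 1) parameter vector.
    """
    errors = X_b.dot(theta) - y          # prediction error for every instance
    return float(np.mean(errors ** 2))   # average of the squared errors

# Tiny made-up dataset generated exactly by y = 1 + 2x
X_b = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y = np.array([[1.0], [3.0], [5.0]])

print(mse(np.array([[1.0], [2.0]]), X_b, y))  # 0.0, a perfect fit
print(mse(np.array([[0.0], [2.0]]), X_b, y))  # 1.0, every prediction is 1 too low
```

Training means searching for the θ that drives this number as low as possible; taking `np.sqrt` of it would give the RMSE without changing which θ wins.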

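So now we know that we need to find the parameters that best fit our training set, but how? One answer is the Normal Equation, a closed-form solution that gives the result directly:

$$\hat{\boldsymbol{\theta}} = \left(\mathbf{X}^\top \mathbf{X}\right)^{-1} \mathbf{X}^\top \mathbf{y}$$

Where:

$\hat{\boldsymbol{\theta}}$ is the value of $\boldsymbol{\theta}$ that minimizes the cost function; $\mathbf{X}$ is the matrix of training instances, one row per instance, with a leading column of ones for the bias term; and $\mathbf{y}$ is the vector of target values containing $y^{(1)}$ to $y^{(m)}$.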

We can use the above equation to find the values of θ that minimize the cost function.
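As a small worked example, take three made-up points lying exactly on $y = 1 + 2x$. Then $\mathbf{X}^\top\mathbf{X} = \begin{pmatrix}3 & 3\\3 & 5\end{pmatrix}$ and $\mathbf{X}^\top\mathbf{y} = \begin{pmatrix}9\\13\end{pmatrix}$, and solving gives $\hat{\boldsymbol{\theta}} = (1, 2)$. The sketch below does the same in NumPy. It swaps the explicit inverse for `np.linalg.solve`, which solves $(\mathbf{X}^\top\mathbf{X})\,\boldsymbol{\theta} = \mathbf{X}^\top\mathbf{y}$ for the same θ without forming the inverse.

```python
import numpy as np

# Made-up training set: three instances lying exactly on y = 1 + 2x
X = np.array([[0.0], [1.0], [2.0]])
y = np.array([[1.0], [3.0], [5.0]])

# Prepend the bias column x_0 = 1 to every instance
X_b = np.c_[np.ones((len(X), 1)), X]

# Normal Equation, solved as the linear system (X^T X) theta = X^T y
theta_hat = np.linalg.solve(X_b.T.dot(X_b), X_b.T.dot(y))

print(theta_hat.ravel())  # expected to be close to [1, 2]
```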

Enough definitions; let’s see how we can implement these equations in Python.

Suppose we have a dataset with a single feature and a single target value on which to perform linear regression. We can generate one as follows:

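```python
import matplotlib.pyplot as plt
import numpy as np

# 100 instances of a single feature, uniformly distributed in [0, 3)
X = 3 * np.random.rand(100, 1)
# Targets follow y = 6 + 4x plus Gaussian noise
y = 6 + 4 * X + np.random.randn(100, 1)

plt.plot(X, y, "b.")
plt.xlabel("X", fontsize=14)
plt.ylabel("y", rotation=0, fontsize=14)
plt.show()
```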

This plots each instance's feature value against its label on an x-y graph.

Now we need to find the values of θ that minimize our cost function. Here is the updated code, which uses the Normal Equation to find θ:

```python
import matplotlib.pyplot as plt
import numpy as np

X = 3 * np.random.rand(100, 1)
y = 6 + 4 * X + np.random.randn(100, 1)

# Add the bias term (x0 = 1) to every instance in our dataset
bias_term = np.ones((100, 1))
X_with_bias = np.c_[bias_term, X]

# Train our model: the Normal Equation gives the theta that best fits the training set (X, y)
theta_best = np.linalg.inv(X_with_bias.T.dot(X_with_bias)).dot(X_with_bias.T.dot(y))

# Let's predict some values now (new instances need the bias column too)
X_new = np.array([[0], [2]])
X_new_with_bias = np.c_[np.ones((2, 1)), X_new]
y_pred = X_new_with_bias.dot(theta_best)

# Plot the regression line over the training data to see how well it fits
plt.plot(X, y, "b.")
plt.plot(X_new, y_pred, "r-", label="Predictions")
plt.axis([0, 3, 0, 22])  # X lies in [0, 3) and y can exceed 15, so show the full data range
plt.legend(loc="best", fontsize=14)
plt.xlabel("$X$", fontsize=14)
plt.ylabel("$y$", rotation=0, fontsize=14)
plt.show()
```
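Because we generated the data ourselves from $y = 6 + 4x$ plus noise, a quick sanity check is to print the learned parameters: they should land near $\theta_0 = 6$ and $\theta_1 = 4$, though not exactly, since the Gaussian noise shifts the best fit slightly. The sketch below repeats the training step with a fixed random seed (42, an arbitrary choice added here) so the run is reproducible.

```python
import numpy as np

np.random.seed(42)  # arbitrary fixed seed so the run is reproducible
X = 3 * np.random.rand(100, 1)
y = 6 + 4 * X + np.random.randn(100, 1)

X_with_bias = np.c_[np.ones((100, 1)), X]
theta_best = np.linalg.inv(X_with_bias.T.dot(X_with_bias)).dot(X_with_bias.T.dot(y))

# The data was generated with theta_0 = 6 and theta_1 = 4, so the estimate
# should be close to [6, 4]; the noise keeps it from matching exactly.
print(theta_best.ravel())
```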

After running the code above, you will get the following graph:

This is a good fit, but instead of implementing the Normal Equation ourselves, we can use an easier way to perform Linear Regression.

We can use Scikit-Learn’s sklearn.linear_model.LinearRegression class to get the same result as above.

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn.linear_model import LinearRegression

lin_reg = LinearRegression()

# Train the lin_reg model with the same X and y as above.
# LinearRegression fits the intercept (bias term) itself, so no column of ones is needed.
lin_reg.fit(X, y)

# Make predictions
X_new = np.array([[0], [2]])
y_pred = lin_reg.predict(X_new)

plt.plot(X, y, "b.")
plt.plot(X_new, y_pred, "r-", label="Predictions")
plt.legend(loc="best", fontsize=14)
plt.xlabel("$X_1$", fontsize=14)
plt.ylabel("$y$", rotation=0, fontsize=14)
plt.show()
```

As you can see, both graphs show the same regression line, so there is no need to implement the algorithm from scratch when Scikit-Learn’s library already provides it.
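Comparing plots by eye is a loose check; comparing the parameters themselves is stricter. In this sketch (again with an arbitrary seed of 42 added for reproducibility), Scikit-Learn's `intercept_` and `coef_` attributes are stacked into one vector and checked against the Normal Equation result. Both approaches solve the same least-squares problem, so the numbers should agree up to floating-point error.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

np.random.seed(42)  # arbitrary seed for reproducibility
X = 3 * np.random.rand(100, 1)
y = 6 + 4 * X + np.random.randn(100, 1)

# Parameters from the Normal Equation (bias column added by hand)
X_with_bias = np.c_[np.ones((100, 1)), X]
theta_best = np.linalg.inv(X_with_bias.T.dot(X_with_bias)).dot(X_with_bias.T.dot(y))

# Parameters from Scikit-Learn (it fits the intercept itself, so no bias column)
lin_reg = LinearRegression()
lin_reg.fit(X, y)
theta_sklearn = np.r_[lin_reg.intercept_, lin_reg.coef_.ravel()]

print(theta_best.ravel())
print(theta_sklearn)
print(np.allclose(theta_best.ravel(), theta_sklearn))
```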

If you liked it, please give it a few claps so I can enthusiastically write more about Machine Learning and other technologies.

Thank you for reading :)